Course Overview

CISC 484/684 – Introduction to Machine Learning

Logistics

Instructor: Sihan Wei

- Incoming first-year CS PhD student

- Research interests: ML & Optimization

- designing ML algorithms with provable learning guarantees

- figuring out how to best optimize it

- Outside of work:

- Badminton, reading, running

- Personal website: https://sihanwei.org

- Email: sihanwei@udel.edu

Course structure

- Lectures: T-Th, 1:15–3pm, SHL 105

- Course website: https://sihanwei.org/cisc484-su25/

- We will share course materials here. Please check the website frequently for lecture notes, suggest readings, etc.

- Canvas: For submitting homework, gradings and asking questions.

Topics (tentative)

Conventional machine learning topics

- ML Foundations

- Linear Regression

- Optimization Basics

- Logistic Regression

- SVM

- Kernel Methods

Ensemble Methods

- Decision Trees

- Random Forests

- Boosting

Deep Learning

- Perceptron and MLP

- Deep Learning Basics

- CNN

- Generative Models

Unsupervised Learning

- PCA

- Gaussian Mixture Models

- EM

Course Objectives

Upon successful completion of this course, you should be able to:

- Recognize the contexts and consequences of applying machine learning methods in applications. (When/Where)

- Understand the fundamentals of machine learning techniques. (Why)

- Apply and implement machine learning techniques in applications. (How)

- Evaluate machine learning results in different settings. (Metrics)

Evaluation Breakdown

| Component | Count | Weight | Notes |

|---|---|---|---|

| Homework | 4 | 48% | 12% each. Late policy applies. |

| Knowledge Checks | 6 | 12% | 2% each. In-class. |

| Midterm Exam | 1 | 20% | Covers first half. |

| Final Exam | 1 | 20% | Covers second half. |

| Total | 100% |

Homework Assignments

- Expect roughly one assignment every 12 days.

- Each assignment will include both analytical and programming components.

- Collaboration is strongly encouraged, but your final write-up must be your own work.

- You must acknowledge all collaborators (if any) for each problem.

Late Policies

- You have a total of 72 late hours available for the course.

- Late hours are rounded up to the nearest hour — for example, if you submit an assignment 20 minutes late, it will count as 1 full late hour.

- Once you have used up all your late hours, any further late submissions will not be graded.

The Use of AI Tools

- There are now online AI-based tools that write code for you, such as ChatGPT, Claude, Gemini, etc. They are very cool, but should not be used for your assignments. Asking an AI to complete an assignment means you are not doing the work yourself.

- You are allowed to ask GPT-like tools in your learning process. However, be aware that answers generated by AI tools often contain factual errors and reasoning gaps.

Exams

- Midterm Exam: In class on July 15.

- Final Exam: In class on August 14 (last day of class);

- Exams are closed-book; no cheatsheets allowed.

- Focus will be on high-level conceptual understanding—no formula memorization required.

Knowledge Checks

- You will receive 50% of the points (i.e. 1% per quiz) as long as you submit a reasonable attempt, regardless of correctness.

- If you score 6 out of 10 or higher, you will receive the full 2% for that quiz.

- These checks are primarily for participation and engagement. The goal is to reinforce key concepts and keep the learning process active.

Why Studying This Course?

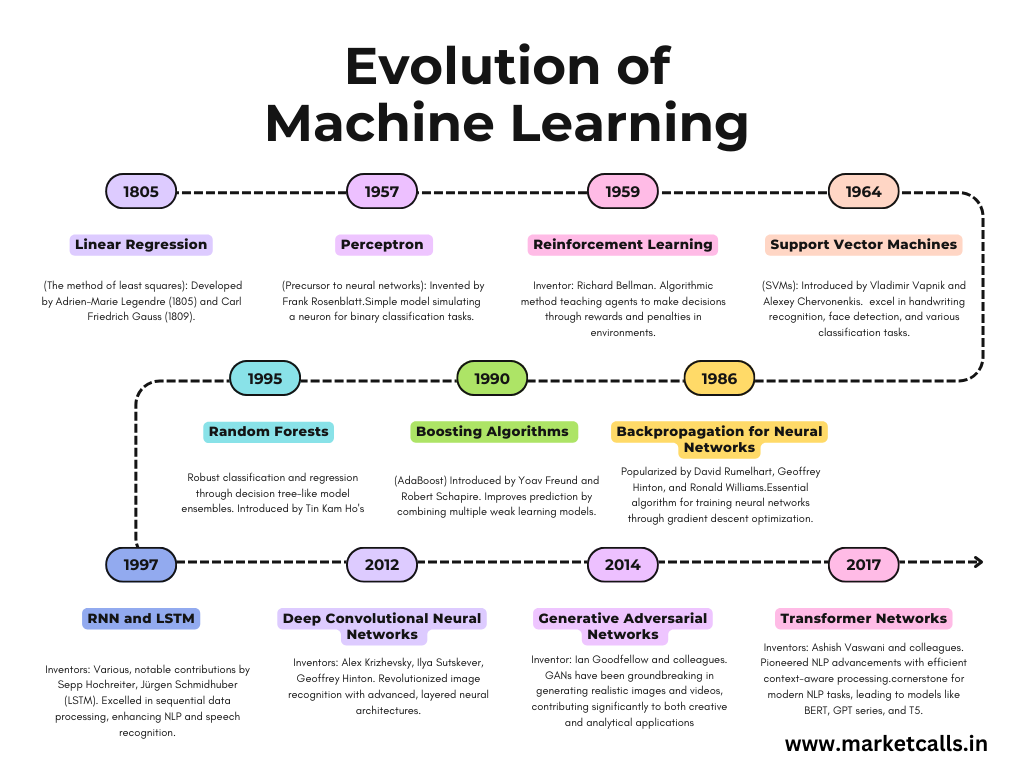

Evolution of Machine Learning

Let’s ask ChatGPT

Why study “Introduction to Machine Learning”, especially in the era of LLMs? Please answer in one sentence.

Because an intro ML course grounds you in core ideas—data representations, training objectives, optimization, generalization—that demystify LLMs and give you the conceptual tools to critique, adapt, and push such advanced models further instead of treating them as black-box magic.

What is Machine Learning?

A Few Definitions

- A set of methods that can automatically detect patterns in historical data, and then use the uncovered patterns to predict future data

- Using experience to gain expertise

- Fitting a function to data

ML is …

learning from data (experience)

Three components

- Data or “experience”

- Method, model, hypothesis, or function

- A computational approach that combines the two

How Do We Represent Data?

Data is complex.

| Input (X) | Function f() | Output (Y) |

|---|---|---|

|

Image Classifier | "cat" |

| Play Audio | Speech to Text | "We will make America great again!" |

| A girl playing with a dog in a grassy field | Generative Model |  |

How does a Machine “See” Data?

High dimensional vectors

A learning example is a vector of length d

Examples drawn from an underlying distribution \[ x_i\in\mathbb{R}^d \]

Each dimension represents a feature

A collection of N examples \[ D=\{x_i\}_{i=1}^N \]

Data Features

- Designing feature functions is critical

- Well-designed representations can significantly impact model performance.

- How should we design features?

- Features are application-specific.

- Domain knowledge is often essential — e.g., biology, vision, speech, etc.

- Features are application-specific.

Example

Suppose you’re building a spam email classifier:

- Raw input (X): Subject line and email body text

- Possible features:

- Number of times “free” appears

- Whether the sender is in your contact list

- Ratio of uppercase letters to total characters

- Presence of suspicious links

- Number of times “free” appears

These features help the model distinguish between spam and legitimate messages.

Three components

- Data or “experience”

- Method, model, hypothesis, or function

- A computational approach that combines the two

Genres of ML

- Supervised Learning: labeled data, predict Y from X

- Unsupervised Learning: no labels, uncover structure in X

- Semi-Supervised Learning: partially labeled data (Not covered in our class)

- Reinforcement Learning: learn via actions + rewards (Not covered in our class)

Supervised Learning

- Data/experience: every example has both features and labels

- Images labeled as “cat” vs. “not cat”

- Text of Yelp reviews and Yelp rating

- Lots of “experience”

- Model: trained to input features and output (a distribution over) labels

- Labels could be discrete (classification) or continuous (regression)

Unsupervised Learning

- No labels, lots of raw data

- Find patterns, learn \(p(X)\)

- Examples:

- Clustering

- Generative models

- Self-supervised learning

When Should We Use ML?

- Is the problem well-defined?

- Do we have high-quality data?

- Can we measure success clearly?

- Do we really need ML?

Questions & Discussion

What are you most excited about?

- Implementing ML algorithms?

- Cool applications?

- Connecting ML to your research?